Automatic build fix suggestions with Vercel Agent

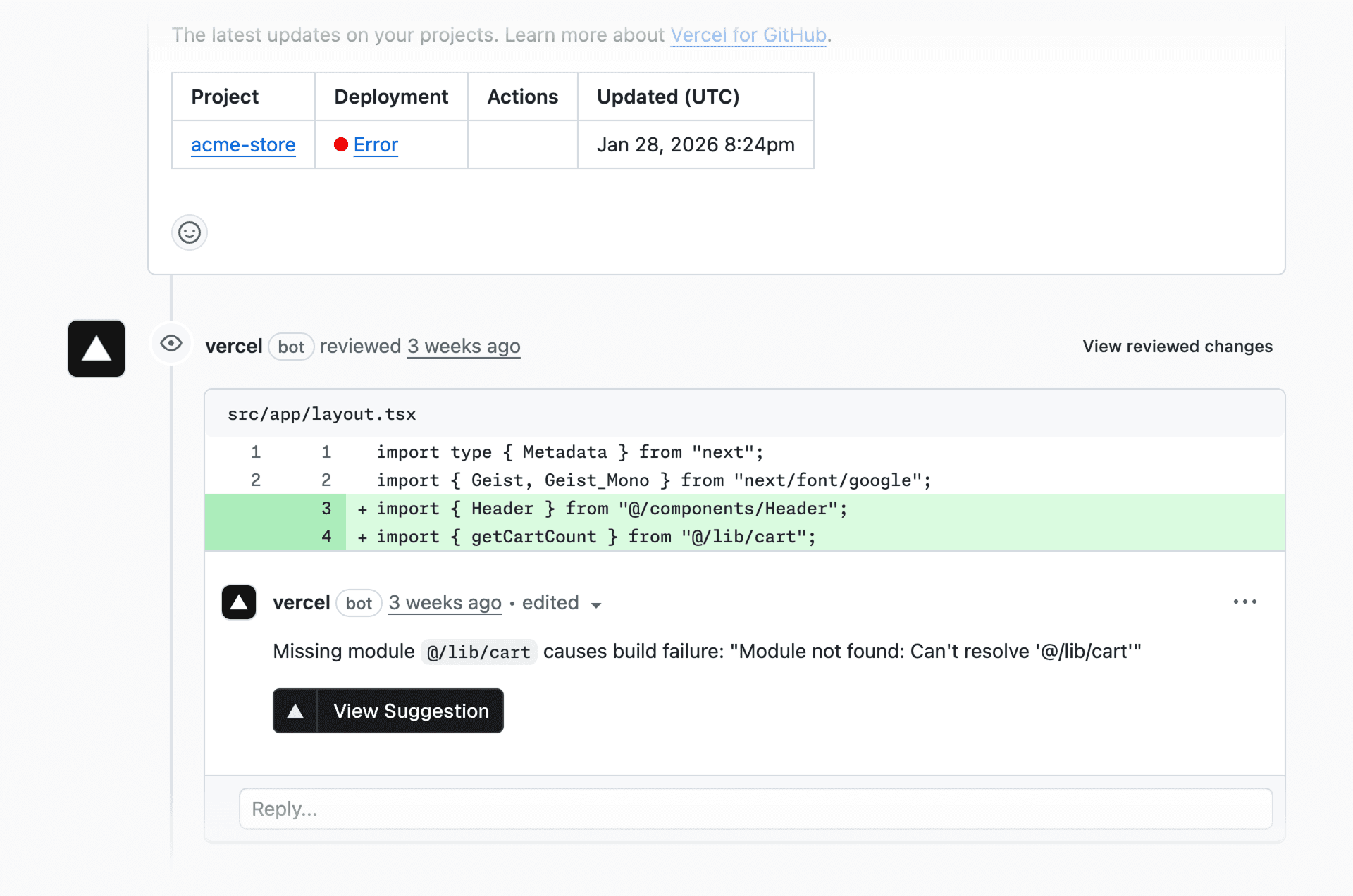

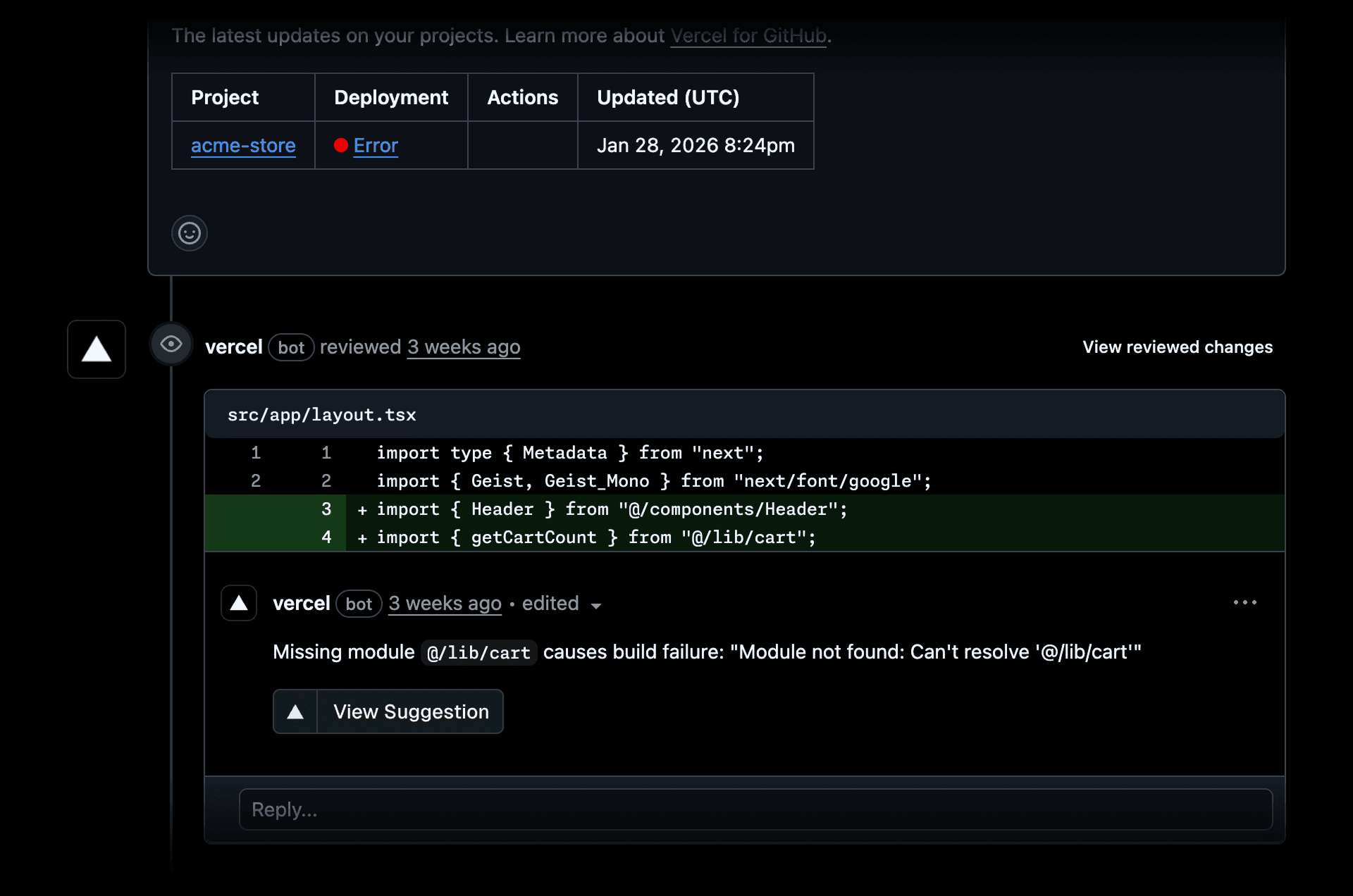

You can now get automatic code-fix suggestions for broken builds from Vercel Agent, directly in GitHub pull request reviews or in the Vercel dashboard.

When Vercel Agent reviews your pull request, it now also scans your deployments for build errors. If it detects a failure, it automatically suggests a code fix based on your code and the build logs.

Vercel Agent can also suggest code fixes in the Vercel dashboard whenever it detects a build error, and submit the suggested change to a GitHub pull request so you can review it before it is merged into your code.

Get started with Vercel Agent code review in the Agent dashboard, or learn more in the documentation.